Source

| [Running Ollama on Google Colab (Free Tier): A Step-by-Step Guide | by Anoop Maurya | May, 2024 | Medium](https://medium.com/@mauryaanoop3/running-ollama-on-google-colab-free-tier-a-step-by-step-guide-9ef74b1f8f7a) |

MMLU-Pro dataset: ‘TIGER-Lab/MMLU-Pro’ [2406.01574] MMLU-Pro: A More Robust and Challenging Multi-Task Language Understanding Benchmark (arxiv.org)

Introduction

因爲想要發展大小模型:小模型使用 Llama3-8B 或是 Mistral-7B,大模型則用 gpt-4o (> 100B?)。

第一步先建立 baseline. 使用 MMLU Pro subset.

mmlu_pro_ollama_llama3.ipynb and mmlu_pro_gpt.ipynb

| 大模型 | 小模型 | |

|---|---|---|

| MMLU | gpt-4o | |

Baseline

| GPT3.5 | GPT4o | Llama3-8B | Mistral-7B | |

|---|---|---|---|---|

| History, 381 | 44% | 43% | 27%-31% | 15%-28% |

Colab program: mmlu_gpt4o.ipynb

MMLU Pro Dataset

第一步是 load dataset. 這裏使用 TIGER-Lab/MMLU-Pro, 基本是一個 MMLU 的 subset.

1 | |

dataset 的結構如下:

1 | |

完整的 MMLU 簡化後 category 如下。

1 | |

一個記錄如下

1 | |

Open AI API

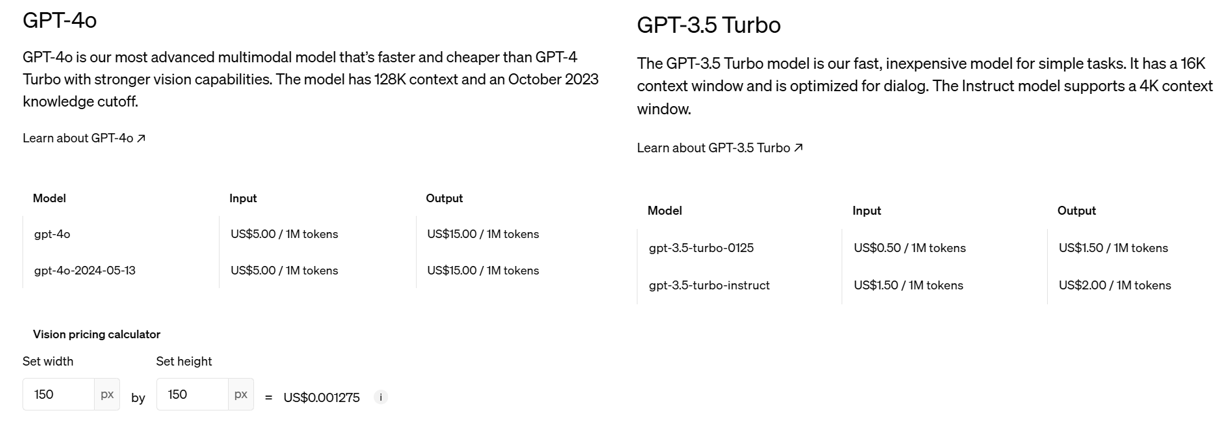

2024/6/19 GPT-4o 和 GPT-3.5 Turbo 的價格差。

GPT-3.5 Turbo 便宜 10 倍,但是準確率也比較差。

GPT 設定

第二步是調用 gpt API,幾個重點:

- 設定模型本身:

- model = “gpt-4o” or “gpt-3.5-turbo-0125”

- 溫度: T = 0 是 greedy decode. T = 0.1 還是以穩定爲主。如果 T = 1 則是創意爲主。

- max_tokens: 最大的 context length, 也就是 KV cache size

- top_p, frequency_penalty, presence_penalty: ?

- 使用結構化 message 的 input prompt:

- role: 訂人設

- content: text 的 question (實際的 input)。應該可以加上 image 的content.

-

回傳 reponse, 其結構應該和 input prompt 一樣

-

message.content: 這是 answer

-

choices? 是同時產生幾個不同的 choices?

-

1 | |

1 | |